Data vs Information

“There was a very bad effect of covid-19 on the stock market. Many companies lost crores of rupees”

The above statement is meaningful in understanding the trend and state of stock market as a whole. But it lacks the specifics, clarity and granularity. That is data for you.

Data can come in many different forms, sizes, formats, patterns… there’s no defined right or wrong, data is just data.

On the other hand, a statement like this

“Due to covid-19, there was an economic downfall which resulted in decrease of 3000 points in the stock market, top 10 companies (as per market cap) lost 12.3% of market cap on average”

has a clear insight, in this case it is to show the decline in market cap of top companies and the amount of decrease in market index. Information is usually derived from data; one might even say the crudest form of insights/information is data.

This distinction is not usually talked about regularly nor is it asked in a quiz however, it makes sense to understand what is what since we aspire to be data professionals.

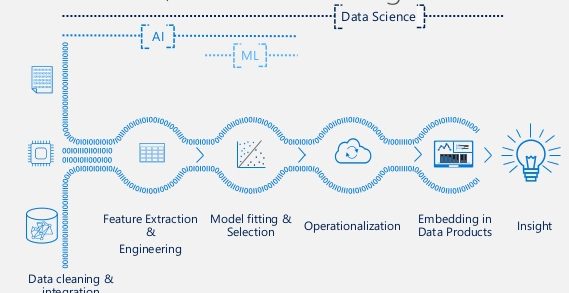

The general idea is to extract information from data and like I said before, data doesn’t necessarily come structured and formatted which is why the need to clean data arises. One might ask, information retrieval by nature is extracting insights from data what is the point of data cleaning then? Simply speaking, insights from bad sources are also going to be bad and hence Data Cleaning.

Data Cleaning

In general, it is said that data cleaning takes maximum amount of time in an analytics project. A general notion is that 90% of the time in an analytics project goes in cleaning the data.

But why is this step so important? Isn’t it overrated?

Imagine you own a Ferrari and you try to run it with kerosine. Well, obviously it won’t work and even if with a remote chance it did work the engine gets shot pretty quickly, that’s because it is not designed to run on kerosine and neither do kerosine has the quality needed for a Ferrari. The same way analytics techniques are designed to draw insights but it all works out only when the data has the quality so our ways are fruitful.

In short, “Garbage In gives Garbage Out”. Moreover, data cleaning brings out the relevance in data to the method being used. Say, we wanted to calculate the product of elements in a user specified list [1,2,3,4,”five”]. Without cleaning we would encounter an error, but swapping the string out for a number brings the relevance and works just fine.

Let’s see some common steps involved in data cleaning. Below is the data

At the first look, we would want to notice a few things:

- Presence of duplicate values

- If there are duplicate values present in the dataset, it would be misleading since the information is repetitive.

- If we encounter duplicate values, we can simply remove them

- Irrelevant entries

- Every dataset has a problem domain, as long as the data is relevant to this problem it’s good enough to work-through. Say, the above dataset is a collection of economic homes clearly, index 10 seems like it’s definitely not an economic house and hence can be called an irrelevant entry

- Even when there’s one irrelevant datapoint we still delete it

- Aggregation (Granular consistency)

- Sometimes we have a row showing some aggregations like sum, mean… it creates inconsistency and hence needs to be removed

- Consistency in units of measurement

- The final column SQ_FT is supposed to be the area in square feet and when a datapoint has different measurement the meaning changes altogether so we need to ensure the conversion of metrics

- Structural errors

- Structural errors are the spelling mistakes, case differences and others that are expected to be standard. In this case the index 11 has berkeley in small case while everywhere else it’s capital, also the NUM_Bath column has an itruder – HURLEY. It’s pretty straight forward how we fix it – adjust the existing structure

But this is all just the beginning, if we realize the above are present in the data, we must then deal with it. Apart from those, we might also have to deal with a few other things:

- Null/ missing values

- Missing values can be standard or non-standard where standard can be identified by pandas the others are supposed to be explicitly identified

- It is also sometimes necessary to understand the sources of these missing values to come up with a strategy to deal with them

- Most common practices to deal with missing values are imputation and deletion

- Outliers

- Outliers might not in every case cause as much damage as the missing ones but it is important to understand their effect nonetheless

- Dealing with outliers is pretty synonymous to missing values we can cap the outliers to a lower value, impute with a statistic or delete them

- Sometimes it also makes sense to perform a separate analysis for the outliers only

- Relevance of data types

- Though it seems obvious, ignoring this aspect might really turn out to be a bummer since it can abrupt a long running code

- The idea is pretty simple: each variable has a most fitting datatype and we need to ensure any new data fits the picture

These few methods though obvious go a long way in making an analyst’s life easier… at least to start off. Down the road you might find more interesting techniques that one might’ve never thought would be needed.

Check out our courses for the whole code on this and more topics related to data science: Here

-By Guru Manikanta